
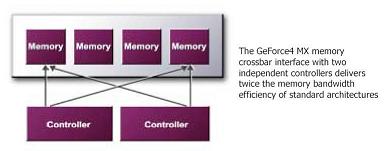

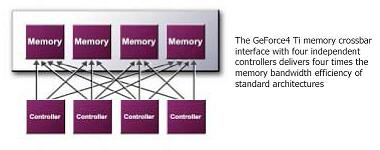

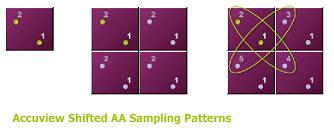

Posted: June 20, 2002
Written by: Adam Honek

Visiontek Geforce4 x 4

Nvidia Geforce4 - Technology in words

Over time Nvidia has thrown out a lot of technical jargon, usually to describe the new achievements that lie hidden deep within its latest silicon. This time is no different: the Geforce4 brings new versions of technologies first seen in the Geforce3. The die itself is still built on a 0.15 micron process, so in practice you can expect a GF4 Ti4600 to run somewhat warmer than a GF3 Ti500 because of the former's higher 300MHz clock speed. The die is only one aspect of the technology, however, so without further delay let us focus on the prime features newly introduced in the Geforce4 series.

- nfiniteFX II Engine (only Geforce4 Ti models)

The nfiniteFX engine was first introduced in the Geforce3 series and has now waited long enough for a facelift. The change (singular, we should say) is nothing groundbreaking but still worth noting. We still get a single pixel shader and a nearly identical architecture, including the same pipeline order and layout, but we now also get a second vertex shader. There is no denying that games grow more realistic each year, and this in turn puts extra strain on the 3D card. This is where the second vertex shader kicks in: scenes that make heavy use of special effects benefit most, with gains in the region of up to 50% over the Geforce3. Less demanding scenes naturally see a smaller speed boost, but given that realism in games is set to keep improving with no end in sight, the extra horsepower can only be a good thing. Nor is this only about performance. With more 3D power available when a game needs it, there is also room for adding more effects without hurting frame rates as heavily.
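Why does the gain depend so much on the scene? Here is a toy bottleneck model. It is our own sketch, not how Nvidia arrived at its figure. It assumes vertex and pixel work are pipelined, so the slower stage sets the frame time, and that a second vertex shader halves only the vertex stage's time while pixel work stays the same. The millisecond workloads are hypothetical.

```python
def frame_time(vertex_ms: float, pixel_ms: float, vertex_units: int) -> float:
    """Frame time in a pipelined toy model: the slowest stage sets the pace.

    Assumption: vertex work divides evenly across vertex units, while pixel
    work is unchanged because there is still a single pixel shader.
    """
    return max(vertex_ms / vertex_units, pixel_ms)


def speedup_from_second_vertex_unit(vertex_ms: float, pixel_ms: float) -> float:
    """Ratio of frame times with one versus two vertex units."""
    return frame_time(vertex_ms, pixel_ms, 1) / frame_time(vertex_ms, pixel_ms, 2)


if __name__ == "__main__":
    # Hypothetical per-frame workloads in milliseconds: (vertex, pixel).
    scenes = {
        "effects-heavy": (15.0, 10.0),
        "moderate": (12.0, 10.0),
        "light": (6.0, 10.0),
    }
    for name, (v, p) in scenes.items():
        gain = speedup_from_second_vertex_unit(v, p)
        print(f"{name}: {gain:.2f}x")
```

With these made-up numbers the effects-heavy scene gains 1.50x, the moderate one 1.20x and the light one nothing. Once the vertex stage is no longer the slowest, extra vertex power stops helping.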
The bottom line is that Nvidia is trying to kill two birds with one stone: gaining speed while letting more complex scenes get the resources they need. What's more, it does this with a die only 5% larger than the Geforce3's. That shows good use of the available die space and a component layout that leaves few precious areas as wasted, unusable space. In conclusion, the programmable pixel and vertex shaders continue to offer the flexibility developers have become familiar with in the Geforce3 series, but they now have more power behind them to handle more complex and demanding 3D games.

- Lightspeed Memory Architecture II

When LMA was first introduced in the Geforce3 chipset back in early 2001, its aim was to overcome one of the biggest bottlenecks in designing modern 3D accelerators: memory bandwidth. Now in its second incarnation, LMA II enhances the "Crossbar" architecture to allow faster access to segments of video memory, which increases the speed at which graphics can be rendered. It does this in several ways. One is not spending bandwidth on pixels the gamer will never see because they are hidden behind other objects, and this is further helped by a quad cache implementation. Improved texture compression reduces the memory needed for realistic, highly detailed textures, which puts less strain on bandwidth within the 3D scene, especially at higher resolutions. Both the MX and Ti series include LMA II, although the MX cannot use it as effectively. It is restricted to two memory controllers rather than four (two 64-bit controllers versus the Ti's four 32-bit ones), so its accesses to video memory are less finely grained. One could argue this does little harm, since the MX cannot render fast enough for the difference to show up on screen. After all, it is not meant for ultra-high resolutions, which remain safely the role of the Ti series.
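Why does the number of memory controllers matter? Here is a rough sketch built on simplifying assumptions of our own. It treats the total bus as 128 bits split evenly among independent controllers, and it assumes each controller's smallest transfer is two of its words per clock (DDR). The narrower each controller is, the less data is wasted on small requests. This is not Nvidia's actual controller design, and it ignores latency, page switching and caching.

```python
import math

TOTAL_BUS_BITS = 128  # assumed total memory bus width, split among controllers
DDR_BURST = 2         # assumed: each controller moves two words per clock (DDR)


def granule_bytes(controllers: int) -> int:
    """Smallest chunk one controller can fetch: its width times the DDR burst."""
    width_bytes = TOTAL_BUS_BITS // controllers // 8
    return width_bytes * DDR_BURST


def efficiency(request_bytes: int, controllers: int) -> float:
    """Fraction of fetched bytes that the request actually needed."""
    g = granule_bytes(controllers)
    fetched = math.ceil(request_bytes / g) * g  # round up to whole granules
    return request_bytes / fetched


if __name__ == "__main__":
    # A hypothetical 8-byte request, e.g. two 32-bit pixels.
    for n in (1, 2, 4):
        print(f"{n} controller(s): granule {granule_bytes(n)} B, "
              f"efficiency {efficiency(8, n):.0%}")
```

For an 8-byte request this gives granules of 32, 16 and 8 bytes and efficiencies of 25%, 50% and 100%. That is the basic intuition behind splitting one wide bus into several narrower, independent ones.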
- AccuView Anti-Aliasing

Back in 2000 we saw a new trend for graphics cards to support FSAA (Full Scene Anti-Aliasing). Since then, progress has been made in offering the feature across a wide range of resolutions with a smaller performance penalty. Whereas the Geforce3 introduced Quincunx FSAA, the Geforce4 takes a new approach in the form of AccuView FSAA. The principle is simple: do what Quincunx did, but with better image quality. The technique mixes neighboring pixels, both down and up, and blends them into a color with less error. This allows lines to be drawn more finely on screen. The result is more realistic outlines on 3D objects that look much closer to real life than a cheap computer imitation. FSAA in general should delight anyone stuck with a lower-end CRT monitor. It should also please those lucky enough to own a large TFT LCD panel who want to run it at a non-native resolution.

- nView

If you can afford two nice big monitors to sit in front of while you bang away at the keyboard or roll the mouse, then nView should interest you. When TwinView first appeared on the Geforce2 MX, its role was to let multiple monitors display one Windows desktop. nView improves on this with dual 350MHz RAMDACs and some neat features that ATI's HydraVision does not currently offer. One feature you might call a luxury is the option to set up a double right-click to open a web link from your browser. That is great for reading all those tweak3d.net messages, or indeed messages on other forums! Something like this is hard to resist, and once you have used it, it is difficult to go back. It has to be seen to be truly appreciated. If the transparency effects in Windows 2000 made you smile back in early 2000, so will nView's transparent window-dragging option, which will appeal to anyone wanting that cool factor. Once the driver optimizations are sorted out, it should deliver on its promise for windows of any size.
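Each of nView's two RAMDACs drives its own display. A quick way to see what a 350MHz part allows is to divide its clock by the number of pixels it must send per second. The sketch below assumes a flat 35% blanking overhead on top of the visible area. That figure is our own rough assumption, and real timings vary by mode and monitor, so treat the results as ballpark numbers only.

```python
RAMDAC_HZ = 350_000_000   # per-head RAMDAC speed quoted for nView
BLANKING_OVERHEAD = 1.35  # assumed: total scan timing ~35% larger than the visible area


def required_pixel_clock(width: int, height: int, refresh_hz: float) -> float:
    """Approximate pixel clock a mode needs, including blanking (assumed factor)."""
    return width * height * refresh_hz * BLANKING_OVERHEAD


def max_refresh(width: int, height: int) -> float:
    """Highest refresh rate one RAMDAC can drive at this resolution (same assumption)."""
    return RAMDAC_HZ / (width * height * BLANKING_OVERHEAD)


def supported(width: int, height: int, refresh_hz: float) -> bool:
    """True if the mode fits within a single RAMDAC's pixel clock."""
    return required_pixel_clock(width, height, refresh_hz) <= RAMDAC_HZ


if __name__ == "__main__":
    for w, h in [(1024, 768), (1600, 1200), (2048, 1536)]:
        print(f"{w}x{h}: up to about {max_refresh(w, h):.0f} Hz per monitor")
```

Under this assumption, 1600x1200 comes out at roughly 135 Hz per head. In practice the monitor's own maximum refresh rate is usually the tighter limit.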
nView is a pleasure to use but may not excite every user; after all, buying one "good" monitor can be expensive enough. We evaluated nView on a Samsung 1200NF monitor and a Samsung 151D LCD display using cloning and horizontal/vertical spanning. Everything worked flawlessly. However, given the LCD panel's native XGA (1024x768) resolution, we had to limit the Windows desktop size for proper use on both monitors. This would not have been an issue had we used the Samsung 900NF and 1200NF monitors. At the time of testing, though, that combination was impossible to set up because our lab lacked a DVI to HD-15 converter.
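The desktop limit we ran into follows from simple arithmetic. As a sketch, assume that both displays must run the same resolution while spanning. That is our own simplification for illustration. Under it, the smaller display caps the mode, and the span doubles one dimension. The CRT resolution below is hypothetical. Only the LCD's XGA mode comes from our test setup.

```python
from typing import Tuple

Mode = Tuple[int, int]  # (width, height) in pixels


def common_mode(a: Mode, b: Mode) -> Mode:
    """Assumption: while spanning, both heads run the same resolution,
    so the desktop is capped by the display with the smaller mode."""
    return min(a, b, key=lambda m: m[0] * m[1])


def span_desktop(a: Mode, b: Mode, direction: str) -> Mode:
    """Size of the combined Windows desktop for a horizontal or vertical span."""
    w, h = common_mode(a, b)
    if direction == "horizontal":
        return (2 * w, h)
    if direction == "vertical":
        return (w, 2 * h)
    raise ValueError(f"unknown span direction: {direction!r}")


if __name__ == "__main__":
    lcd_xga = (1024, 768)  # native XGA resolution of the LCD
    crt = (1600, 1200)     # hypothetical CRT setting, for illustration only
    print(span_desktop(crt, lcd_xga, "horizontal"))  # (2048, 768)
    print(span_desktop(crt, lcd_xga, "vertical"))    # (1024, 1536)
```

Pairing two CRTs that both run 1600x1200 would, under the same assumption, give a 3200x1200 horizontal span instead.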
